Introduction

- Eco City is an educational turn-based simulation game in which the player acts as a city's mayor, managing resources and making decisions each turn to avoid a game over.

- Each turn, three city policies are drawn with some randomness. Any one of them can be accepted or rejected, each choice with its own effects; the turn then ends and the effects are applied along with the background simulation.

- A Deep Q-Network (DQN) agent is trained to play the game and reveal imbalances in its design.

Methods

- We model the game as an RL environment with state, actions and rewards. The agent is rewarded for every consecutive turn survived. Randomness is reproduced using environment seeding.

- The DQN agent is trained for 1000 episodes with experience replay, using a single neural network (2 hidden layers of 32 neurons each) to estimate Q-values.

- Its performance and behavior are compared with those of a completely random agent, and decision trends are analyzed.
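The environment framing above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the class name `ToyCityEnv`, the single `budget` resource, the made-up policy effects, and the encoding of six actions (accept or reject one of the three drawn policies). The real Eco City state and rules are not reproduced here; the sketch only shows a seeded reset, a reward of +1 per turn survived, and a random baseline agent.

```python
import random


class ToyCityEnv:
    """Hypothetical stand-in for the game, not the actual Eco City rules."""

    HAND_SIZE = 3  # three policies are drawn each turn

    def __init__(self, n_policies=6, max_turns=50):
        self.n_policies = n_policies
        self.max_turns = max_turns  # assumed cap so every episode terminates
        self.rng = random.Random()

    def reset(self, seed):
        # Seeding makes the sequence of policy draws reproducible.
        self.rng.seed(seed)
        self.budget = 10  # assumed single resource
        self.turn = 0
        self.hand = self._draw()
        return self._observe()

    def _draw(self):
        return self.rng.sample(range(self.n_policies), self.HAND_SIZE)

    def _observe(self):
        return (self.budget, *self.hand)

    def step(self, action):
        # Assumed encoding: actions 0..2 accept slot 0..2, actions 3..5 reject it.
        slot = action % self.HAND_SIZE
        accept = action < self.HAND_SIZE
        policy = self.hand[slot]
        effect = policy - 2 if accept else -1  # made-up policy effects
        self.budget += effect - 2              # made-up background drain
        self.turn += 1
        game_over = self.budget <= 0
        done = game_over or self.turn >= self.max_turns
        reward = 0.0 if game_over else 1.0     # +1 for each turn survived
        self.hand = self._draw()
        return self._observe(), reward, done


def run_random_episode(env, seed):
    """Baseline: pick uniformly among the six actions until the episode ends."""
    agent_rng = random.Random(seed)
    env.reset(seed)
    turns, done = 0, False
    while not done:
        _, reward, done = env.step(agent_rng.randrange(2 * env.HAND_SIZE))
        turns += int(reward)
    return turns
```

Running `run_random_episode(ToyCityEnv(), seed)` twice with the same seed returns the same turn count, which is the property that lets train and test seeds be kept separate.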

Results

- After training, the agent survived 18.9 turns on average (random agent: 2.5 turns) over a total of 2000 runs.

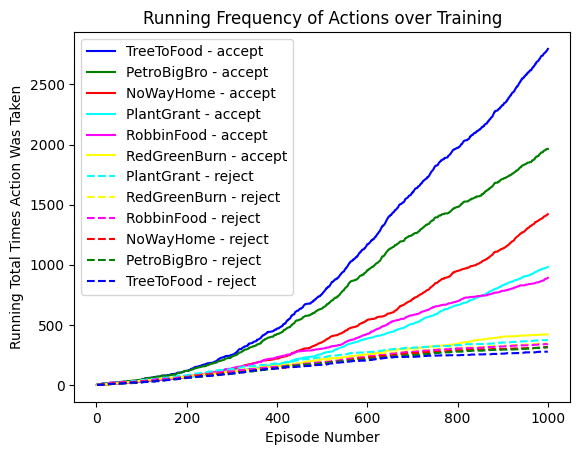

- Dominant strategies emerged over the course of training. Notably, the agent rejected policies less often over successive episodes, and after training it exclusively accepted policies.

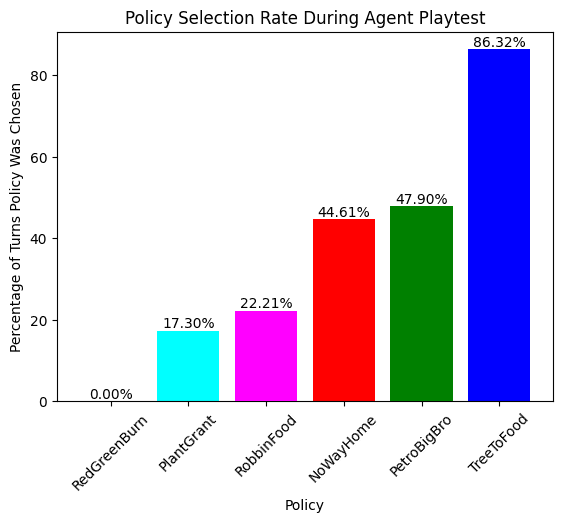

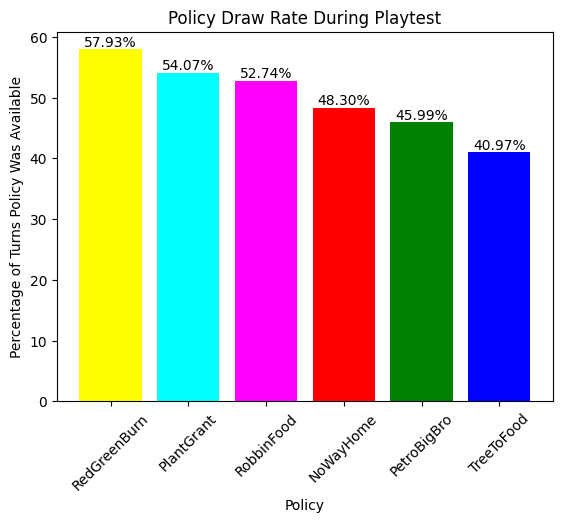

- Some policies were overpowered (almost always chosen) and some were underpowered (almost never chosen).
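One way such a decision-trend analysis could be carried out is to compare how often each policy is chosen with how often it is offered. The sketch below assumes a hypothetical log of `(offered_policies, chosen_policy)` pairs, one per turn; the function names and the 5% / 95% thresholds are illustrative choices, not values from this work.

```python
from collections import Counter


def pick_rates(log):
    """Return, per policy, the fraction of offers in which it was chosen.

    log: iterable of (offered_policies, chosen_policy) pairs, one per turn.
    """
    offered, chosen = Counter(), Counter()
    for hand, pick in log:
        offered.update(hand)
        chosen[pick] += 1
    return {p: chosen[p] / offered[p] for p in offered}


def flag_imbalanced(rates, low=0.05, high=0.95):
    """Split policies into 'almost always' and 'almost never' chosen."""
    over = sorted(p for p, r in rates.items() if r >= high)
    under = sorted(p for p, r in rates.items() if r <= low)
    return over, under


# Hypothetical four-turn log with placeholder policy names.
example_log = [
    (("A", "B", "C"), "A"),
    (("A", "C", "D"), "A"),
    (("B", "C", "D"), "B"),
    (("A", "B", "D"), "A"),
]
rates = pick_rates(example_log)
overpowered, underpowered = flag_imbalanced(rates)
```

With three policies on offer each turn, an agent with no preference would pick each offered policy about one third of the time, so rates far from that value are the ones worth inspecting.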

Conclusions

- The DQN agent became proficient enough for playtesting with a reasonably small neural network (2x32 hidden nodes) and few training steps (<10000).

- Clear imbalances in the game design were revealed by the agent's behavior during playtesting, with no need for human players.

- Rebalancing parameters that are visible in the agent's observed state would not require retraining.

An Autonomous Player Agent for Game Balance Insight on an Educational Video Game

Ahsan Imam Istamar

Terence

Samuel Philip

Hidayaturrahman

An AI playtester exposed major imbalances in a complex game's design using Reinforcement Learning.

Hyperparameters

| Hyperparameter | Value |
| --- | --- |
| Train Seeds | [0..999] |
| Test Seeds | [1000..1999] |
| Learning Rate | 0.01 |
| Discount Factor | 0.99 |
| Epsilon Max | 1 |
| Epsilon Min | 0.1 |
| Epsilon Decay | 0.998 |
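To make the exploration settings concrete, the sketch below computes an epsilon-greedy schedule from the three epsilon values listed above. It assumes a multiplicative decay applied once per update and clipped at the minimum; whether the decay is applied per episode or per training step is not stated here, so `n` is simply "number of decay updates". The function names are hypothetical.

```python
import math

EPS_MAX, EPS_MIN, EPS_DECAY = 1.0, 0.1, 0.998  # values from the hyperparameter list


def epsilon_at(n):
    """Exploration rate after n decay updates (assumed multiplicative decay)."""
    return max(EPS_MIN, EPS_MAX * EPS_DECAY ** n)


def updates_until_floor():
    """Smallest n at which the decayed value reaches EPS_MIN."""
    return math.ceil(math.log(EPS_MIN / EPS_MAX) / math.log(EPS_DECAY))
```

Under this assumed schedule the floor is reached only after somewhat more than 1000 updates, so if the decay were applied once per episode, exploration would still be slightly above the minimum at the end of 1000 training episodes (roughly 0.135). If it were applied per training step, the floor would be reached much earlier in training.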